Carl Saucier-Bouffard

N.B. Please note that the text below is a “work in progress” and is currently incomplete. It will be revised and completed at a later date. – C.S-B.

A. Introduction

The readings that I have completed as part of my WID fellowship have helped me recognize the truth of the following words of wisdom: “Tell me and I forget; teach me and I may remember; involve me and I will learn.” (Xun Kuang, Xunzi, book 8, chapter 11) Taking part in the WID program has greatly enriched my teaching by helping me design and integrate into my courses many active learning activities, collaborative small group tasks, paired interviews, exploratory writing activities, and formal assignments that have more creative elements than my standard thesis-governed essays. In the past year, I have also integrated new communication technologies into my courses. For instance, in order to experiment with the “flipped classroom” model, I have co-created a few educational videos, including one on the legal rights of great apes (http://vimeo.com/72014721), which I use in conjunction with the novel Ishmael as part of a Reflections seminar.

Given that what I consider to be my most challenging and burdensome professional duty is to assign a numerical grade to the multiple essays written by each of my 160 students, the focus of this portfolio will be the task-specific grading rubrics that I have developed out of my experience as a WID fellow.

My thoughts on this issue have been influenced mostly by my reading and discussion of John C. Bean’s Engaging Ideas – The Professor’s Guide to Integrating Writing, Critical Thinking, and Active Learning in the Classroom. I have also greatly benefitted from my discussions with Ian Mackenzie and Anne Thorpe, and from the grading rubrics that former WID fellows Robert Stephens and Sarah Allen have generously agreed to share with me. In order to explore the many possibilities offered by grading rubrics, I have consulted the database of the plagiarism-prevention website turnitin.com. On this website, one can find an online grading tool that allows teachers to choose from (and, in most cases, download) more than forty pre-created grading rubrics.

B. The Problems with Numerical Grades

I have always found the task of assigning numerical grades (on many types of assignments or on the overall performance of students) to be problematic. Like many of my colleagues, I am of the opinion that, for many types of graded work (including blog or discussion forum postings, thinking pieces, reading summaries, journal entries or other forms of exploratory writing), the fairest grading system is a “pass / fail” one.[1] I would also contend that assigning numerical grades to some formal assignments (including the critical analysis essays that my students have to write as part of the course “The Ethics of What We Eat”) may sometimes stifle students’ learning. From my discussions with numerous students, I know that many of them regularly face the following dilemma: to explore additional readings and run the risk of performing slightly less well on assignments or to focus exclusively on the readings that will be under evaluation in their assignments and probably get a higher grade.[2]

Another important problem with assigning numerical grades to essays is what Bean calls the “problem of implied precision” (Bean, Engaging Ideas, p.279). Simply put, numerical grades convey a sense of objectivity that is not authentic. When teachers assign a grade of 88% to an essay, the implicit message that they send is that this essay deserves exactly 88%, not 90% or 86%. In reality, can they really tell the difference between an essay that deserves 86% and one that deserves 90%? I have my doubts. If the same teacher were to grade the same essay months later, using the same evaluation criteria, would she or he assign the same numerical grade? Again, I have my doubts. On a related note, Bean points out that some teachers may falsely assume that their grading criteria are universal and that they therefore do not need to make them explicit to their students. In fact, as clearly shown by the work of Paul Diederich (1974), there are no universal, unspoken, and implicit grading criteria used by all (or most) teachers to assign numerical grades to essays (Bean, p.268). Evaluation criteria and the reasoning that produces different numerical grades vary enormously amongst teachers (Bean, p.268).

Diederich collected three hundred essays written by first-year students at three different universities and had them graded by fifty-three professionals in six different occupational fields. He asked each reader to place the essays in nine different piles in order of ‘general merit’ and to write a brief comment explaining what he or she liked and disliked about each essay. Diederich reported these results: ‘Out of the 300 essays graded, 101 received every grade from 1 – 9; 94 percent received either seven, eight, or nine different grades; and no essay received less than five different grades’ (p.6). (Bean, p.268)

If these 53 readers could not agree on what grade, from 1 to 9, each of these 300 essays deserves, it seems safe to assume that, if they had been asked to assign a numerical grade from 1 to 100, their grades would have varied even more.

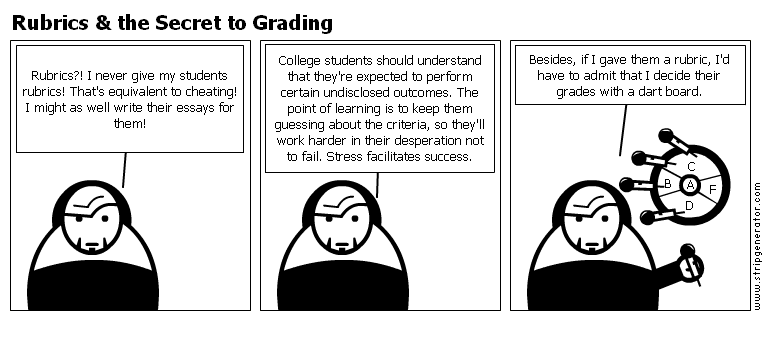

C. One Partial Solution: Task-Specific Grading Rubrics

Professor John C. Bean makes a convincing case that task-specific grading rubrics could partly solve the problem of widely inconsistent numerical grades, given that they clarify the criteria for evaluation and remind the evaluators of them (pp.269-270). Using grading rubrics could be one of the best ways to arrive at consistent and relatively fair grades for essays and other assignments. There are many other advantages to using a grading rubric. For instance, grading rubrics can be easily shared with students, and they provide them with a clear explanation of our criteria for evaluation. Furthermore, like Bean (p.289), I believe that grading rubrics, once filled out, provide meaningful feedback to students. If properly designed, rubrics can highlight the main strengths and weaknesses of assignments.

In my own experience, by sharing the grading rubrics with students a few weeks before the assignment is due, I make sure that students know what is expected of them and that they can use the rubrics as they revise their assignment. As I send the filled-out rubrics to my students once their assignments are graded, they very rarely complain about their grades. The rubrics clearly help each of them understand why their assignment has received a given grade. Students are also given valuable feedback that they can use to improve their writing.

Two Examples of Task-Specific Grading Rubrics

First Example

As part of my Reflections seminar (which tackles the environmental world views of the so-called “boomsters” and “doomsters”), my students have to write an imaginary dialogue between Ishmael (the protagonist of the novel Ishmael) and Zira (one of the main characters of the movie Planet of the Apes). In this written assignment, students have to role-play the opposing views of Ishmael and Zira in an imaginary conversation about helping humans to thrive on the Planet of the Apes. Here are the detailed instructions that I share with my students two or three weeks before the due date.

To help me assign a grade to this assignment, I use a task-specific grading rubric that is built on the following four criteria:

Understanding of the arguments made by Ishmael (i.e. Are Ishmael’s arguments summarized with clarity, accuracy, and nuance?)

Understanding of the position held by Zira (i.e. Is Zira’s position summarized with clarity, precision, and nuance?)

Support (i.e. Does the dialogue include at least 7 passages paraphrased from the novel Ishmael, and are the best supporting passages possible chosen? Is each paraphrased passage identified through a clear page citation?)

Writing mechanics (i.e. How many grammar and / or spelling mistakes does the assignment include?)

Earlier this term, after having shown this grading rubric to my students, I asked them to challenge my criteria of evaluation and, if possible, to refine them. Only one of my students argued for a radical change to my rubric: to get rid of the “writing mechanics” criterion. We then debated the merits of this criterion. When I put my student’s motion to the vote, the overwhelming majority of my students voted against the motion and in favour of keeping the grading rubric as it is. The in-class discussion surrounding this grading rubric has had many advantages. For one thing, it has led me to better justify the importance of each of these four criteria of evaluation.

Later this term, one week before this assignment is due, I will hold a peer-review workshop, in which my students will read the assignment of another student. They will then have to fill out my grading rubric and comment on the assignment. I believe that this workshop will probably generate useful feedback and will deepen students’ understanding of the four criteria of my grading rubric.

Second Example

As part of my critical thinking course (entitled “Knowledge of the Human Mind”), my students have to complete an oral presentation towards the end of the term. In order to grade it, I rely on a grading rubric built on these five criteria:

Thesis statement (i.e. Does the presentation include a clear, relevant, and precise thesis statement that directly answers the assignment’s question?)

Logical validity of the argument (i.e. How well do the premises logically support the thesis statement? Is the argument presented logically valid?)

Truth of the premises (i.e. Based on the evidence offered, to what extent is it reasonable to assume that each premise is true? Is each of the premises supported by at least one peer-reviewed academic article or book?)

Legitimate objection to their argument and their reply to it (i.e. Does the presentation end with a legitimate objection to the argument presented? Is a valid reply to this objection offered?)

Content organization (i.e. How well is the content of the presentation organized? Does the presenter rely on a clear and concise PowerPoint presentation (or other visual materials)?)

This rubric is shared with my students and explained to them in great detail two or three weeks before the date of their presentation. Prior to their oral presentation, which takes place at the end of the term, my students spend four weeks reading and analyzing the textbook Critical Thinking For College Students, authored by Denise Albert. This book, as well as eight of my lectures, is devoted to the concepts of “thesis statement,” “logical validity,” “truth of a premise,” and “soundness.”

During each oral presentation, I handwrite a lot of comments on the rubric. I then give each student the chance to reply to some of my criticisms and objections. The exchange that follows each presentation is very useful to determine a fair and accurate grade for each presenter. Using Microsoft Excel, I then highlight each box that corresponds to the performance of the presenter and I type my final comments in these boxes. Here is one example.

D. Grading Rubrics Are Not Perfect

One problem with grading rubrics is that, as they are designed to assess a limited number of specific competencies, they may do so at the expense of overall understanding and knowledge. It could be argued that grading rubrics may be too simplistic to accurately assess students’ overall learning. I think that they definitely assess one specific kind of learning (just like I.Q. tests assess one specific kind of knowledge), but is this enough? Would a grading rubric designed to grade a 1,500-word essay really help me properly assess my students’ thinking process and what they learned during the past 15 hours of classes? It could be argued that any such evaluation tool assesses only the extent to which students are able to understand the criteria of my rubric and meet them. Would this be a good indicator of their overall understanding and knowledge? Perhaps not.

But rubrics do not have to be the ‘final arbiter’ as to the precise numerical grade we assign to assignments. Rubrics could rather be used as a guide, helping us determine a fair range in which the grade of an assignment should be. An essay that demonstrates superior understanding of the concepts and ideas studied in class could obtain a numerical grade at the upper end of this range. The accompanying teacher’s comments could explain why an assignment deserves a grade at the lower end or at the upper end of the range determined by the rubric.

[1] It may be that the fairest grading system for the overall performance of students is also a “pass / fail” one. Such a grading system could have the advantages of reducing the unhealthy stress of our students and fostering group cohesion (see http://www.mayoclinicproceedings.org/article/S0025-6196(11)61250-0/pdf).

[2] When I was a university student, one of the courses where I experienced the greatest intellectual development was my Normative Ethics course. Why? Because my Ethics professor (the late G.A. Cohen, who had always promoted egalitarian policies) was given exceptional permission to assign only two types of grades: pass or fail.